Web scraping – How it collects public data and what you can do with it

Web scraping, also known as web harvesting, is a term you hear more and more often because of the benefits it offers both businesses and individuals. As businesses increasingly rely on public data to make informed decisions, finding effective ways to collect that data is becoming more important. So what is web harvesting, and what are its benefits?

This article takes a closer look at web harvesting and what it can do. We will also examine the role that a parser and a location-specific proxy (such as a UK proxy) play in the process.

We’ll cover the following topics: what web scraping is, the role parsing plays, what web scraping can be used for, and the tools it requires.

What is Web Scraping?

Web harvesting lets you automatically collect large amounts of public data from many websites. The information is then combined into a single format, such as a spreadsheet, so that it can be organized and used according to the user’s requirements. You should only collect public data, meaning data you can view when you access a website without having to sign in or complete a CAPTCHA. Do not attempt to gather private data or data behind a sign-in. That data is not considered public, and collecting it could lead to legal consequences.
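As a rough illustration of the "single format" step, here is a minimal Python sketch that combines records from different sources into spreadsheet-friendly CSV text. The records, site names, and the `records_to_csv` helper are made up for this example and are not part of any particular scraping tool.

```python
import csv
import io

# Hypothetical records, as if gathered from two different public pages.
records = [
    {"source": "shop-a.example", "product": "Kettle", "price": "24.99"},
    {"source": "shop-b.example", "product": "Kettle", "price": "22.50"},
]

def records_to_csv(rows, fieldnames):
    """Write a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

csv_text = records_to_csv(records, ["source", "product", "price"])
print(csv_text)
```

The resulting text can be saved to a `.csv` file and opened in any spreadsheet program.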

The programs that do this are called web scrapers. You enter the data that you wish to collect and any other parameters, and the program scans different websites and gathers the relevant information. If you are a programmer, you can create your own tool. You can also use pre-built tools like Octoparse and Smart Scraper, which don’t require any programming knowledge.
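If you do want to build your own tool, the basic loop might look something like the Python sketch below. It assumes the "parameter" is a single keyword and that the pages are publicly accessible. The `fetch` and `scrape` functions and the sample URLs are illustrative names, not the interface of any real product. The demo passes in stand-in pages, so nothing is downloaded when you run it.

```python
from urllib.request import urlopen

def fetch(url, timeout=10):
    """Download a page and return its HTML as text (makes a real network request)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")

def scrape(urls, keyword, fetch_page=fetch):
    """Visit each URL and record how often the keyword appears in the raw page."""
    results = []
    for url in urls:
        html = fetch_page(url)
        # Note: this counts matches in the raw HTML, including tags and attributes.
        count = html.lower().count(keyword.lower())
        if count:
            results.append({"url": url, "keyword": keyword, "mentions": count})
    return results

# Stand-in pages so the example runs without touching the network.
sample_pages = {
    "https://shop-a.example/kettles": "<h1>Kettles</h1><p>Steel kettle, 1.7 l</p>",
    "https://shop-b.example/toasters": "<h1>Toasters</h1>",
}
found = scrape(sample_pages, "kettle", fetch_page=sample_pages.get)
print(found)
```

To scrape real pages, you would call `scrape(list_of_urls, "kettle")` and let the default `fetch` do the downloading.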

AI is one of the biggest stories in tech. We are still only in the early days of its development. As the technology becomes more sophisticated, it will be applied to further develop tech-based tools, for example by training machines to recognize patterns and then act on what they have detected. It can also help you develop business ideas and work toward your goals.

What Role Does Parsing Play in Web Scraping?

Web harvesting requires data parsing, yet this step is often overlooked. It’s easy to forget because commercial web scrapers have a built-in parser. Your web scraper collects data in the form of code snippets, such as the HTML that makes up a web page. These snippets do not make much sense by themselves. The parser takes them and “translates” them into a structured, human-readable format. Without a parser, the data you collect will not make sense.
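To make the parser's job more concrete, here is a small sketch using Python's built-in `html.parser` module. The HTML snippet, the `ProductParser` class, and the assumption that product names sit in `<h2>` tags and prices in `<span class="price">` tags are all invented for illustration. Real pages will have their own structure.

```python
from html.parser import HTMLParser

class ProductParser(HTMLParser):
    """Collect the text inside <h2> (name) and <span class="price"> (price) tags."""

    def __init__(self):
        super().__init__()
        self.current_field = None  # which field the next piece of text belongs to
        self.products = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            # Each <h2> is assumed to start a new product.
            self.current_field = "name"
            self.products.append({})
        elif tag == "span" and ("class", "price") in attrs:
            self.current_field = "price"

    def handle_endtag(self, tag):
        self.current_field = None

    def handle_data(self, data):
        if self.current_field and self.products:
            self.products[-1][self.current_field] = data.strip()

# Raw "code snippet" as a scraper might collect it (made-up sample).
raw_html = """
<div><h2>Steel Kettle</h2><span class="price">24.99</span></div>
<div><h2>Glass Kettle</h2><span class="price">31.00</span></div>
"""

parser = ProductParser()
parser.feed(raw_html)
print(parser.products)
```

The raw HTML goes in, and a plain list of names and prices comes out, which is the "translation" step described above.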

What Can Be Done with Web Scraping?

A web scraper can do a lot of things. Your imagination is the only limit to what data you can collect and how you use it. Businesses can use a web scraper to conduct market research and make critical decisions. Individuals can use web harvesting to find the best products and investment opportunities.

Some of the ways web scraping can be used are:

Price monitoring

Monitoring market trends

Machine learning enrichment

Financial data aggregation

Monitoring consumer sentiment

Following the news

Discovering investment opportunities

Lead generation

Monitoring competitors

Academic research

Improving SEO and SERP rankings
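To give one of these uses some shape, price monitoring could be sketched in Python roughly as follows. It assumes you already have two snapshots of scraped prices, keyed by product name. The product names, prices, and the `price_changes` helper are hypothetical.

```python
def price_changes(previous, current):
    """Return {product: (old_price, new_price)} for products whose price differs."""
    changes = {}
    for product, new_price in current.items():
        old_price = previous.get(product)
        # Skip products that are new in the current snapshot.
        if old_price is not None and old_price != new_price:
            changes[product] = (old_price, new_price)
    return changes

# Made-up snapshots from two consecutive scraping runs.
yesterday = {"Steel Kettle": 24.99, "Glass Kettle": 31.00}
today = {"Steel Kettle": 22.49, "Glass Kettle": 31.00, "Travel Kettle": 18.00}

changes = price_changes(yesterday, today)
print(changes)
```

Here only the product whose price moved between runs is reported. Unchanged and newly listed products are ignored.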

Tools Required for Web Scraping

Two tools are essential for web harvesting success. The first is the web scraping tool itself. You could still collect data by visiting websites and recording your findings manually, but this is slow and inefficient and wastes a lot of time. A tool automates the process and can collect all the data that you need. There is a wide range of web scraping tools to choose from.

A residential proxy is the next tool you will need. These proxies can help you bypass geo-restrictions and collect more data. To collect data from a specific country, you can use a location-specific proxy such as a UK proxy. UK proxies, like other residential proxy services, hide your IP address to keep you anonymous online. Your IP address is replaced with one that belongs to a real device, so your requests appear to come from a live user. This helps keep your web scraper from being banned. A ban can leave you with inaccurate or incomplete data.
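For readers writing their own scraper, routing requests through a proxy might look like the sketch below, which uses Python's standard `urllib.request` module. The proxy address and credentials are placeholders; your proxy provider would supply the real values, and the exact connection details vary between providers.

```python
import urllib.request

# Hypothetical proxy endpoint and credentials; replace with your provider's values.
PROXY_URL = "http://username:password@uk.proxy.example:8080"

def build_proxy_opener(proxy_url):
    """Create an opener that sends HTTP and HTTPS requests through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

opener = build_proxy_opener(PROXY_URL)

# A request would then look like this (commented out because the proxy above is a placeholder):
# with opener.open("https://example.com", timeout=10) as response:
#     html = response.read().decode("utf-8", errors="replace")
```

Building the opener does not contact the proxy. The connection is only made when `opener.open(...)` is called.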

Final Thoughts

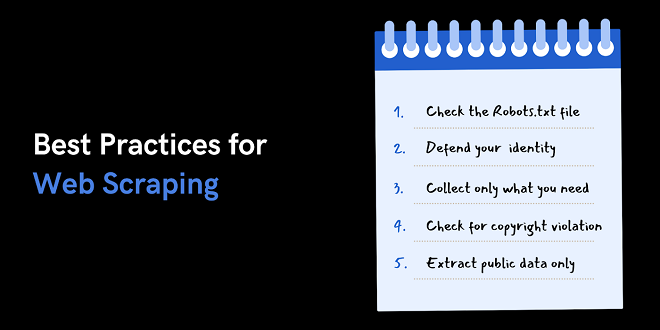

Both individuals and businesses can benefit from web scraping. There are some things you should remember in order to use web harvesting legally, efficiently and effectively. First, only ever scrape public information, and do not collect any personal data. Treat the data you collect with respect. Finally, to keep your scraper from being banned, pair your web scraping tool with a trusted residential proxy.